publications

publications by category in reverse chronological order. generated by jekyll-scholar.

2025

- Materialist: Physically based editing using single-image inverse rendering. Lezhong Wang, Duc Minh Tran, Ruiqi Cui, Thomson TG, Anders Bjorholm Dahl, and 3 more authors. arXiv preprint arXiv:2501.03717, 2025

Achieving physically consistent image editing remains a significant challenge in computer vision. Existing image editing methods typically rely on neural networks, which struggle to accurately handle shadows and refractions. Conversely, physics-based inverse rendering often requires multi-view optimization, limiting its practicality in single-image scenarios. In this paper, we propose Materialist, a method combining a learning-based approach with physically based progressive differentiable rendering. Given an image, our method leverages neural networks to predict initial material properties. Progressive differentiable rendering is then used to optimize the environment map and refine the material properties so that the rendered result closely matches the input image. Our approach enables a range of applications, including material editing, object insertion, and relighting, while also introducing an effective method for editing material transparency without requiring full scene geometry. Furthermore, our environment map estimation method achieves state-of-the-art performance, further improving the accuracy of image editing tasks. Experiments demonstrate strong performance across synthetic and real-world datasets, excelling even on challenging out-of-domain images.

- ReLumix: Extending Image Relighting to Video via Video Diffusion Models. Lezhong Wang, Shutong Jin, Ruiqi Cui, Anders Bjorholm Dahl, Jeppe Revall Frisvad, and 1 more author. arXiv preprint arXiv:2509.23769, 2025

Controlling illumination during video post-production is a crucial yet elusive goal in computational photography. Existing methods often lack flexibility, restricting users to specific relighting models. This paper introduces ReLumix, a novel framework that decouples the relighting algorithm from temporal synthesis, thereby enabling any image relighting technique to be seamlessly applied to video. Our approach reformulates video relighting as a simple yet effective two-stage process: (1) an artist relights a single reference frame using any preferred image-based technique (e.g., diffusion models or physics-based renderers); and (2) a fine-tuned Stable Video Diffusion (SVD) model seamlessly propagates this target illumination throughout the sequence. To ensure temporal coherence and prevent artifacts, we introduce a gated cross-attention mechanism for smooth feature blending and a temporal bootstrapping strategy that harnesses SVD’s powerful motion priors. Although trained on synthetic data, ReLumix shows competitive generalization to real-world videos. The method demonstrates significant improvements in visual fidelity, offering a scalable and versatile solution for dynamic lighting control.

2024

-

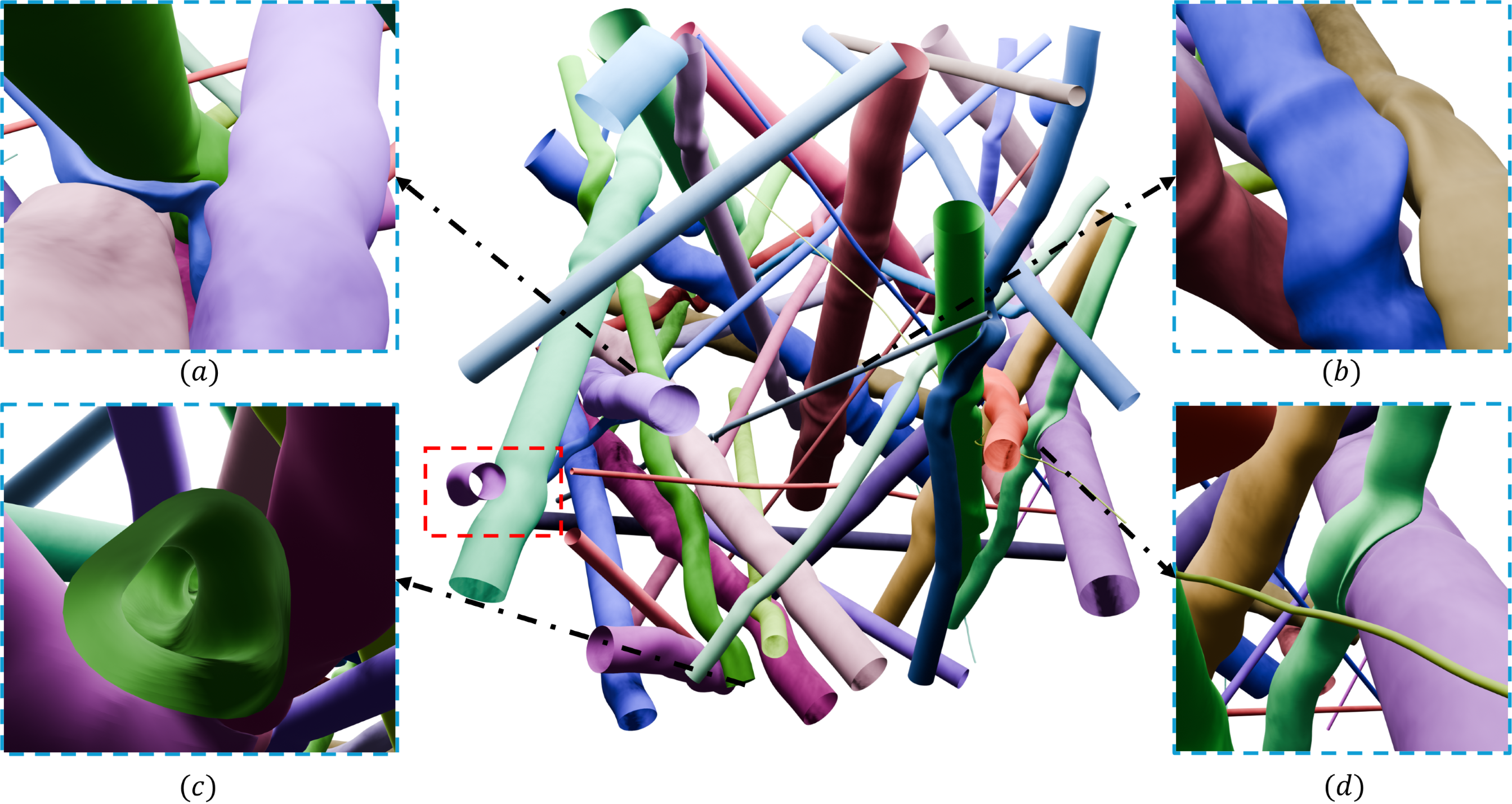

Synthesizing 3D Axon Morphology: Springs are All We NeedRuiqi Cui, J Andreas Bærentzen, and Tim B DyrbyIn International Workshop on Computational Diffusion MRI, 2024

The realism of digital phantoms for white matter microstructure is highly valued. Realistic synthesis provides reliable input for generating synthetic diffusion MRI signals, which can be used to evaluate biophysical models or to train machine learning models of microstructure features such as axon diameter, shape, and cellular structure. Inspired by the spring-mass systems widely used in physical simulation, we propose a novel and flexible method for synthesizing axon morphology and its dynamics under physical constraints. Specifically, starting from an initial axon configuration, our method constructs a spring-mass system using sampling rules inspired by the real 3D axon and cell morphology observed in X-ray synchrotron imaging. By minimizing the spring potential energy, our method optimizes the positions of the sampled mass points, thereby deforming the axon morphology in response to its physical surroundings. After optimization, a triangle mesh of the axon surfaces is obtained, which can be used as input for Monte Carlo diffusion MRI simulations. Experimental results demonstrate that our approach successfully mimics a range of axon morphologies and the dynamic environment.
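The energy-minimization step described above can be illustrated with a generic spring-mass relaxation. This is a minimal sketch under our own assumptions, not the authors' implementation. Springs are hypothetical `(i, j, stiffness, rest_length)` tuples. The energy is the standard Hookean sum of `0.5 * k * (|p_i - p_j| - rest_length)**2` over all springs. Plain gradient descent with fixed anchor points stands in for whatever optimizer and axon-specific sampling rules the paper actually uses.

```python
import math

# Hypothetical spring format: (i, j, stiffness, rest_length), indices into `points`.

def spring_energy(points, springs):
    """Total Hookean potential energy: sum of 0.5 * k * (|p_i - p_j| - rest)^2."""
    energy = 0.0
    for i, j, k, rest in springs:
        d = math.dist(points[i], points[j])
        energy += 0.5 * k * (d - rest) ** 2
    return energy

def energy_gradient(points, springs):
    """Analytic gradient of spring_energy with respect to every point."""
    grad = [[0.0, 0.0, 0.0] for _ in points]
    for i, j, k, rest in springs:
        diff = [a - b for a, b in zip(points[i], points[j])]
        d = math.sqrt(sum(c * c for c in diff))
        if d == 0.0:
            continue  # direction undefined for coincident points; skip this spring
        coeff = k * (d - rest) / d
        for c in range(3):
            grad[i][c] += coeff * diff[c]  # dE/dp_i
            grad[j][c] -= coeff * diff[c]  # dE/dp_j is the opposite
    return grad

def relax(points, springs, fixed=(), step=0.1, iters=500):
    """Gradient descent on the spring energy; points listed in `fixed` never move."""
    pts = [list(p) for p in points]
    fixed = set(fixed)
    for _ in range(iters):
        g = energy_gradient(pts, springs)
        for idx, p in enumerate(pts):
            if idx in fixed:
                continue
            for c in range(3):
                p[c] -= step * g[idx][c]
    return pts

if __name__ == "__main__":
    # A free middle point between two fixed anchors, initially pulled off-axis.
    springs = [(0, 1, 1.0, 0.8), (1, 2, 1.0, 0.8)]
    start = [(0, 0, 0), (1, 0.5, 0), (2, 0, 0)]
    print(relax(start, springs, fixed={0, 2}))
```

In this toy example both springs are stretched, so the middle point settles at approximately (1, 0, 0), where the two spring forces balance. A real phantom would replace the three points with the sampled axon and cell mass points.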

-

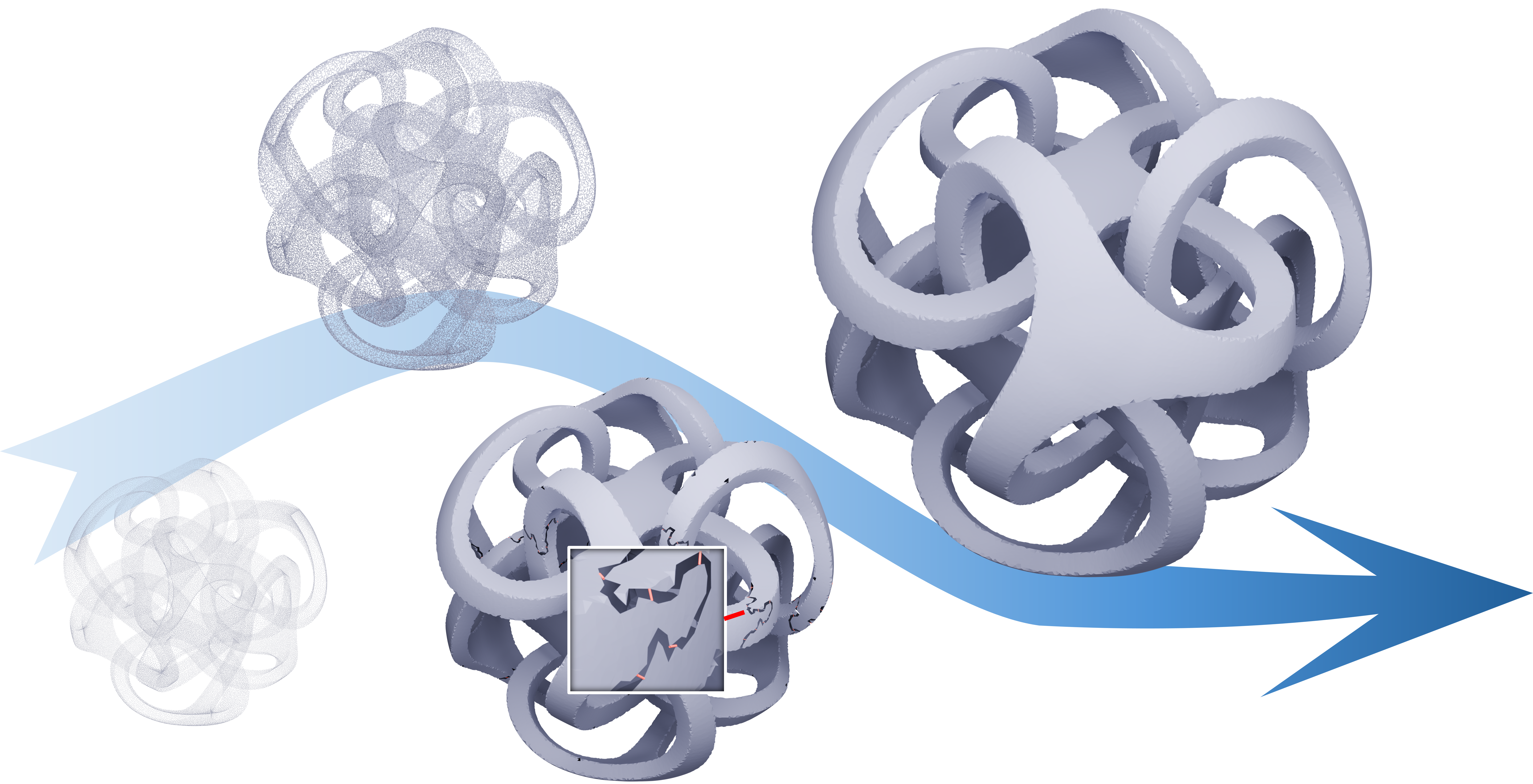

Surface Reconstruction Using Rotation SystemsRuiqi Cui, Emil Toftegaard Gæde, Eva Rotenberg, Leif Kobbelt, and J Andreas BærentzenACM Transactions on Graphics (TOG), 2024

Inspired by the seminal result that a graph and an associated rotation system uniquely determine the topology of a closed manifold, we propose a combinatorial method for reconstructing surfaces from points. Our method constructs a spanning tree and a rotation system. Since the tree is trivially a planar graph, its rotation system determines a genus-zero surface with a single face, which we then refine incrementally by inserting edges that split faces. To raise the genus, special handles are added by inserting edges between different faces, thereby merging them. We apply our method to a wide range of input point clouds to investigate its effectiveness, and we compare it to several other surface reconstruction methods. We find that our method offers better control over outlier classification, i.e., which points to include in the reconstructed surface, as well as more control over the topology of the reconstructed surface.
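The link between a rotation system and surface topology can be made concrete with the standard face-tracing procedure. A rotation system gives each vertex a cyclic order of its neighbours. When a walk arrives at `v` from `u`, it leaves toward the neighbour that follows `u` in `v`'s cyclic order. The closed walks produced this way are the faces, and Euler's formula `V - E + F = 2 - 2g` then yields the genus `g` of a connected graph. The sketch below is a minimal illustration of that textbook construction, not the paper's reconstruction pipeline. The dict-of-lists representation and the function names are hypothetical.

```python
def trace_faces(rotation):
    """Trace the faces of a rotation system.

    `rotation` maps each vertex to a list of its neighbours in cyclic order.
    Each undirected edge contributes two darts (u, v) and (v, u); every dart
    lies on exactly one face.
    """
    unvisited = {(u, v) for u, nbrs in rotation.items() for v in nbrs}
    faces = []
    while unvisited:
        start = min(unvisited)  # deterministic choice of starting dart
        face, dart = [], start
        while True:
            unvisited.discard(dart)
            face.append(dart)
            u, v = dart
            nbrs = rotation[v]
            # Leave v toward the neighbour that follows u in v's cyclic order.
            w = nbrs[(nbrs.index(u) + 1) % len(nbrs)]
            dart = (v, w)
            if dart == start:
                break
        faces.append(face)
    return faces

def genus(rotation):
    """Genus of the orientable surface defined by a connected rotation system."""
    v = len(rotation)
    e = sum(len(nbrs) for nbrs in rotation.values()) // 2
    f = len(trace_faces(rotation))
    return (2 - v + e - f) // 2  # from V - E + F = 2 - 2g

if __name__ == "__main__":
    path_tree = {0: [1], 1: [0, 2], 2: [1]}
    planar_k4 = {0: [1, 3, 2], 1: [2, 3, 0], 2: [0, 3, 1], 3: [0, 1, 2]}
    twisted_k4 = {0: [1, 3, 2], 1: [2, 3, 0], 2: [0, 3, 1], 3: [0, 2, 1]}
    for name, rot in [("tree", path_tree), ("planar K4", planar_k4), ("twisted K4", twisted_k4)]:
        print(name, len(trace_faces(rot)), genus(rot))
```

A spanning tree always traces to a single face of genus 0, as the abstract states. The two K4 examples share the same edges and differ only in the rotation at vertex 3. With the planar rotation they bound four faces (genus 0). With the twisted rotation they bound two faces (genus 1, a torus).

The same arithmetic explains the two kinds of edge insertion. An edge inside one face increases both E and F by one, leaving the genus unchanged. An edge joining two different faces increases E while decreasing F, which raises the genus by one.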

2023

-

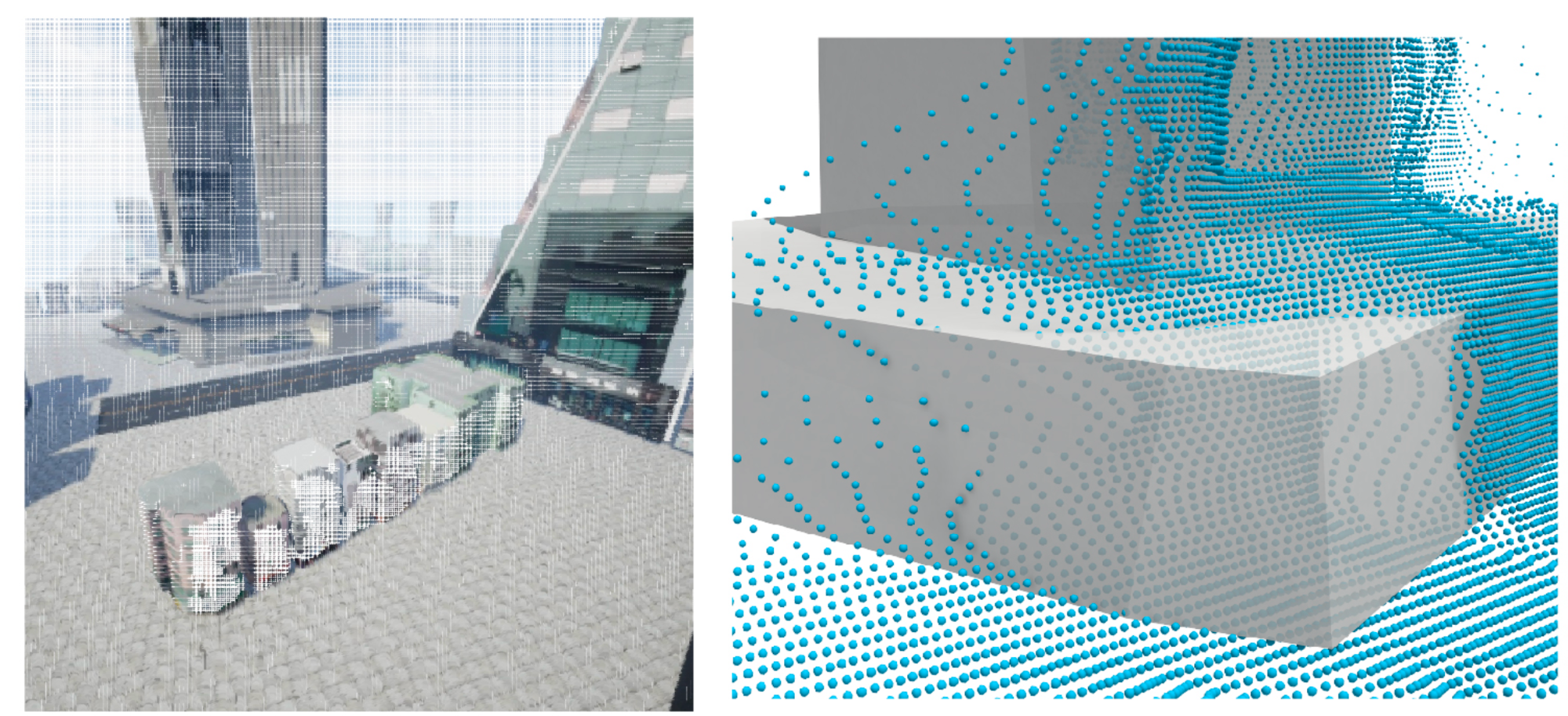

PA-Net: Plane Attention Network for real-time urban scene reconstructionYilin Liu, Ruiqi Cui, Ke Xie, Minglun Gong, and Hui HuangComputers & Graphics, 2023

Traditional urban reconstruction methods can only output incomplete 3D models, which depict the scene regions that are visible to the moving camera. While learning-based shape reconstruction techniques make single-view 3D reconstruction possible, they are designed to handle single objects that are well represented in the training datasets. This paper presents a novel learning-based approach for reconstructing complete 3D meshes of large-scale urban scenes in real time. The input video sequences are fed into a localization module, which segments different objects and determines their relative positions. Each object is then reconstructed in its local coordinate frame to better approximate the models in the training datasets. The reconstruction module is adapted from BSP-Net [1], which is capable of producing compact polygon meshes. However, major changes have been made so that unoriented objects in large-scale scenes can be reconstructed efficiently using only a small number of planes. Experimental results demonstrate that our approach can reconstruct urban scenes with buildings and vehicles using 400 to 800 convex parts in 0.1 to 0.5 seconds.
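The "convex parts" and "planes" mentioned above refer to the BSP-Net style of shape representation. In that representation, each convex part is the intersection of half-spaces bounded by planes, and the shape is the union of its convex parts. The sketch below shows only a plain inside/outside query on that representation, under our own assumptions. It is not PA-Net's network or BSP-Net's code. The half-space convention `a*x + b*y + c*z + d <= 0` and the helper names `box_planes`, `inside_convex` and `inside_shape` are hypothetical.

```python
# Hypothetical plane format: (a, b, c, d); a point is inside the half-space
# when a*x + b*y + c*z + d <= 0.

def inside_convex(point, planes):
    """A convex part is the intersection of its half-spaces."""
    x, y, z = point
    return all(a * x + b * y + c * z + d <= 0 for a, b, c, d in planes)

def inside_shape(point, convexes):
    """The shape is the union of its convex parts."""
    return any(inside_convex(point, planes) for planes in convexes)

def box_planes(lo, hi):
    """Six half-spaces describing the axis-aligned box lo <= p <= hi."""
    planes = []
    for axis in range(3):
        n = [0.0, 0.0, 0.0]
        n[axis] = -1.0
        planes.append((*n, lo[axis]))   # -x + lo <= 0, i.e. x >= lo
        n = [0.0, 0.0, 0.0]
        n[axis] = 1.0
        planes.append((*n, -hi[axis]))  # x - hi <= 0, i.e. x <= hi
    return planes

if __name__ == "__main__":
    # Two unit boxes side by side, e.g. a crude two-part building.
    shape = [box_planes((0, 0, 0), (1, 1, 1)), box_planes((1, 0, 0), (2, 1, 1))]
    for p in [(0.5, 0.5, 0.5), (1.5, 0.5, 0.5), (2.5, 0.5, 0.5)]:
        print(p, inside_shape(p, shape))
```

Because each part needs only a handful of planes, a scene made of a few hundred convex parts stays compact. This is the property the abstract relies on when it reports reconstructions with 400 to 800 convex parts.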

2021

-

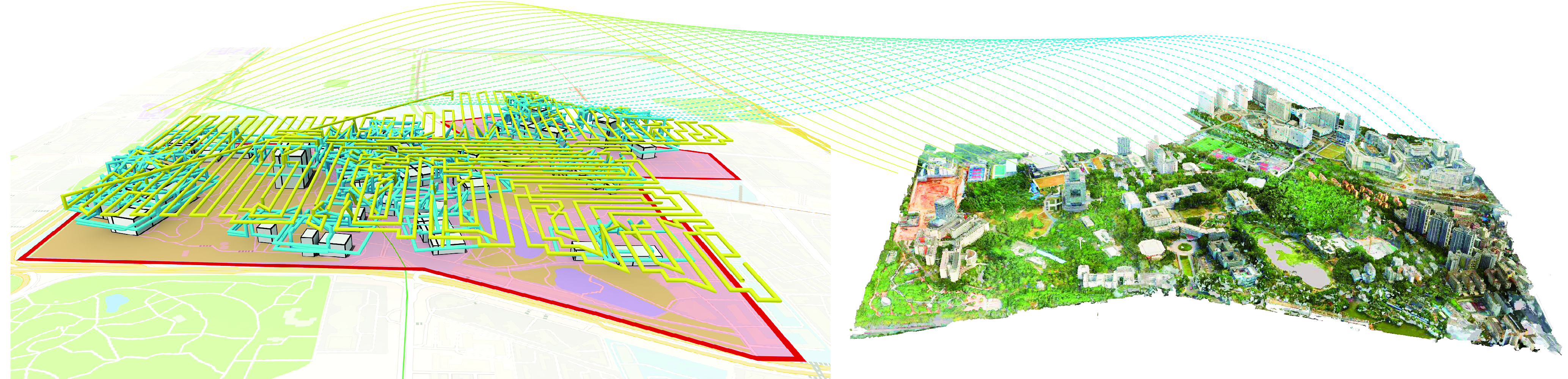

Aerial path planning for online real-time exploration and offline high-quality reconstruction of large-scale urban scenesYilin Liu, Ruiqi Cui, Ke Xie, Minglun Gong, and Hui HuangACM Transactions on Graphics (TOG), 2021

Existing approaches have shown that, by carefully planning flight trajectories, images captured by Unmanned Aerial Vehicles (UAVs) can be used to reconstruct high-quality 3D models of real environments. These approaches greatly simplify large-scale urban scene reconstruction and reduce its cost. However, to properly capture height discontinuities in urban scenes, all state-of-the-art methods require prior knowledge of the scene geometry; hence, additional preprocessing steps are needed before the actual image acquisition flights can be performed. To address this limitation and make urban modeling techniques even more accessible, we present a real-time explore-and-reconstruct planning algorithm that does not require any prior knowledge of the scene. Using only captured 2D images, we estimate 3D bounding boxes for buildings on the fly and use them to guide online path planning for both scene exploration and building observation. Experimental results demonstrate that the aerial paths planned by our algorithm in real time for unknown environments support reconstructing 3D models of comparable quality and lead to shorter flight times.

2020

- Statistically driven model for efficient analysis of few-photon transport in waveguide quantum electrodynamics. Ruiqi Cui, Dian Tan, and Yuecheng Shen. JOSA B, 2020

Understanding transport properties in quantum nanophotonics plays a central role in designing few-photon devices, yet it has long been hampered by an extensive computational burden. In this work, we propose a statistically driven model that greatly eases this computational burden, based on an in-depth understanding of the few-photon spontaneous emission process. By utilizing phenomenological, statistically driven inter-photon offset parameters, the proposed model accelerates the transport calculation by three orders of magnitude compared with conventional numerical approaches. We showcase two-photon transport computations benchmarked against a rigorous analytical approach. Our work provides an efficient tool for designing few-photon nanodevices, and it significantly deepens the understanding of correlated quantum many-body physics.